This is my first post on this blog, and as such (with regard to the blog’s title) it actually falls into the “mostly…” category. It’s about a short paper, written with Martin Weier and Ingo Wald, that recently got accepted at IEEE Visualization; it is called Accelerating Force-Directed Graph Drawing with RT Cores.

Here’s a link to that paper on ResearchGate: https://www.researchgate.net/publication/343904020_Accelerating_Force-Directed_Graph_Drawing_with_RT_Cores

The idea is to use NVIDIA’s RTX ray tracing extensions for something other than graphics and rendering. It is motivated by this paper, where the authors used RTX to accelerate point-in-tet queries.

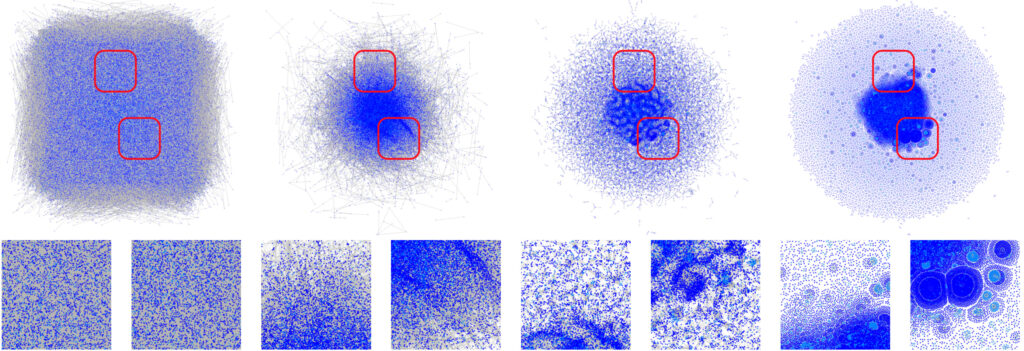

In this paper, we use RTX to accelerate force-directed graph drawing, which is concerned with finding nice layouts for (usually 2D) graphs. Nice means, among other things, that there aren’t too many edge crossings and that vertices connected by edges end up relatively close to each other. The simplest force-directed algorithms treat the graph vertices as particles, with mutual forces acting on each particle and the overall, physically inspired system trying to reach equilibrium with respect to those forces.

At the heart of the force-directed algorithm is a nearest-neighbor query where we find, for each vertex/particle in parallel, all the neighbors within a certain radius, and then compute the repulsive forces they exert.
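To make that query concrete, here is a minimal brute-force sketch in Python (not the code from the paper). The function name `repulsive_forces` and the force magnitude `k*k/d` are assumptions for illustration only; this post doesn't spell out the exact force model.

```python
import math

def repulsive_forces(positions, k):
    """Brute-force O(n^2) reference for the fixed-radius neighbor query.

    positions: list of (x, y) tuples, one per vertex.
    k: search radius; vertices at distance >= k exert no force.
    The magnitude k*k/d is an assumed, Fruchterman-Reingold-style repulsion.
    """
    forces = []
    for i, (xi, yi) in enumerate(positions):
        fx = fy = 0.0
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue  # a vertex does not repel itself
            dx, dy = xi - xj, yi - yj
            d = math.hypot(dx, dy)
            if d == 0.0 or d >= k:
                continue  # coincident or outside the search radius
            mag = k * k / d
            # push vertex i away from vertex j
            fx += dx / d * mag
            fy += dy / d * mag
        forces.append((fx, fy))
    return forces
```

Every vertex looks at every other vertex here, which is exactly the quadratic cost that a spatial hierarchy is supposed to avoid.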

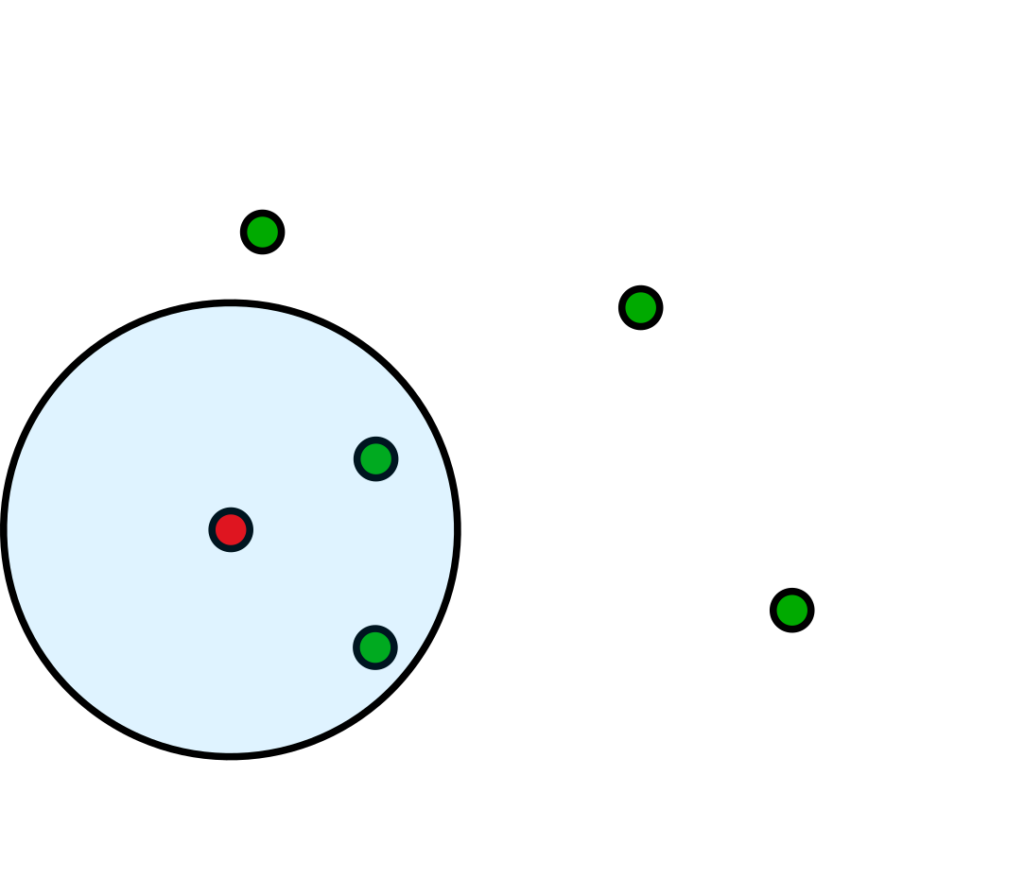

For example, in the image above, the two green vertices inside the large circle exert forces on the red vertex. To find those vertices, we just expand a circle of radius k around the red vertex and gather all the neighbors inside it. This type of nearest-neighbor query can easily be implemented with a quadtree or a kd-tree over the particles: during traversal we only descend into inner nodes that overlap the large circle, and then only consider the leaf nodes and particles that lie within it.
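As a rough illustration of that traversal, here is a small kd-tree radius query in Python. It is a sketch under my own assumptions (dictionary-based nodes, the hypothetical names `build_kdtree` and `radius_query`), not the LBVH code we compare against in the paper.

```python
def build_kdtree(points, indices=None, depth=0):
    """Build a 2D kd-tree over point indices, splitting at the median."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % 2  # alternate between x and y
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return {
        "index": indices[mid],
        "axis": axis,
        "left": build_kdtree(points, indices[:mid], depth + 1),
        "right": build_kdtree(points, indices[mid + 1:], depth + 1),
    }

def radius_query(node, points, center, k, out):
    """Append to `out` the indices of all points closer than k to `center`."""
    if node is None:
        return
    p = points[node["index"]]
    dx, dy = p[0] - center[0], p[1] - center[1]
    if dx * dx + dy * dy < k * k:
        out.append(node["index"])
    # Only descend into a subtree if the search circle reaches across
    # the splitting plane into that side.
    delta = center[node["axis"]] - p[node["axis"]]
    if delta <= k:
        radius_query(node["left"], points, center, k, out)
    if delta >= -k:
        radius_query(node["right"], points, center, k, out)
```

For the force computation, the query center would be the position of the vertex of interest, and that vertex's own index would be dropped from the result.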

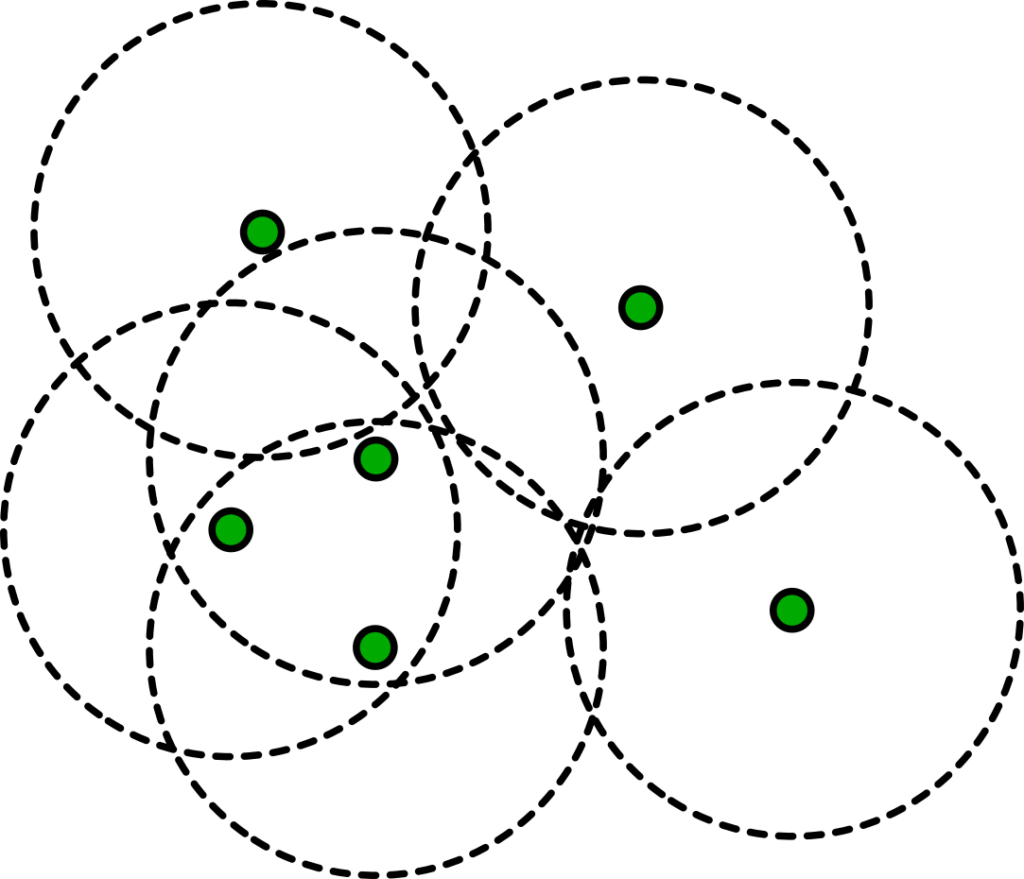

As it just so happens, for the force model that we chose, this large circle’s radius k is always the same, and this allows us to reverse the algorithm. Instead of building a hierarchy over particles, we build a bounding volume hierarchy over circles:

The circles have their centers at the vertex/particle positions, and the radius is the same as the search radius we used for the nearest neighbor query from before.

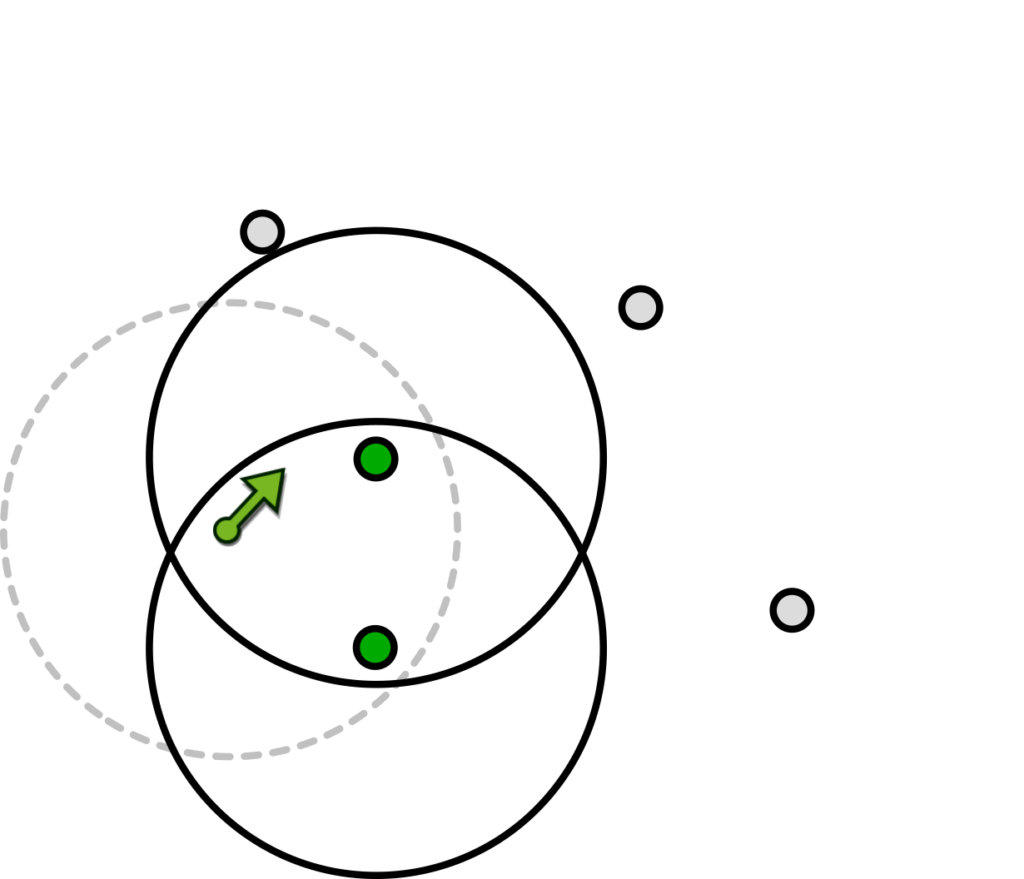
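The reason the reversal works can be written down in one line. The notation is my own and not taken from the paper: $p_i$ is the position of the vertex of interest, $p_j$ the position of another vertex, and $C_j$ the circle of radius $k$ around $p_j$.

$$
\lVert p_j - p_i \rVert < k \iff p_i \in C_j, \qquad C_j = \{\, x : \lVert x - p_j \rVert < k \,\}
$$

The left-hand side is the original radius search around $p_i$; the right-hand side asks which circles contain $p_i$. Since distance is symmetric, both select the same vertices, but only as long as $k$ does not depend on $i$. With a per-vertex radius $k_i$, the circle around $p_j$ would have to change size depending on who is asking.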

Now, instead of using a nearest-neighbor point query (done in software and therefore slow, with kd-tree construction and traversal code that is laborious to write), we can just shoot a ray through the bounding volume hierarchy (and obviously, that’s what we use RTX for).

It doesn’t matter in which direction the ray is pointing, so we choose an arbitrary direction vector and give the ray an infinitesimal length to keep the number of BVH node tests minimal. It’s only important that the ray’s origin is located at the vertex position that we’re interested in:

The circles that overlap the ray origin belong to the particles that exert forces on the vertex of interest (we’ll also hit the circle of the very particle that we’re testing for, shown here in gray, but we just ignore that during traversal).

It turns out that this is really easy to implement with OptiX and RTX. We just build a user geometry over the circles and a BVH, and in the intersect program we check if the circles that we’re presented with overlap the query ray. If so, we’re in the range where mutual forces are active; in fact, this query will give us the exact same particles that we would have gathered using the NN query. Using RTX for this is actually quite fast: we are several times faster than a fairly optimized, hand-tuned CUDA LBVH implementation.
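To show the logic without any GPU code, here is a CPU-side emulation in Python. It is only a sketch: it does not use the OptiX API, the names `circle_bounds`, `build_bvh` and `query` are made up for this example, and the near-zero-length ray is modeled as a plain point-in-box test. On the real hardware, the box tests are what the RT cores do, and the leaf test corresponds to the intersect program.

```python
def circle_bounds(c, r):
    """Axis-aligned box around a circle, as (min_x, min_y, max_x, max_y)."""
    return (c[0] - r, c[1] - r, c[0] + r, c[1] + r)

def union(a, b):
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))

def build_bvh(centers, r, ids=None, leaf_size=2):
    """Median-split BVH over circles of equal radius r (centers non-empty)."""
    if ids is None:
        ids = list(range(len(centers)))
    bounds = circle_bounds(centers[ids[0]], r)
    for i in ids[1:]:
        bounds = union(bounds, circle_bounds(centers[i], r))
    if len(ids) <= leaf_size:
        return {"bounds": bounds, "ids": ids}
    # split along the wider axis of the node's box
    axis = 0 if bounds[2] - bounds[0] >= bounds[3] - bounds[1] else 1
    ids = sorted(ids, key=lambda i: centers[i][axis])
    mid = len(ids) // 2
    return {"bounds": bounds,
            "children": [build_bvh(centers, r, ids[:mid], leaf_size),
                         build_bvh(centers, r, ids[mid:], leaf_size)]}

def query(node, centers, r, origin, self_id, hits):
    """Collect the circles that contain `origin`, skipping the vertex itself."""
    b = node["bounds"]
    if not (b[0] <= origin[0] <= b[2] and b[1] <= origin[1] <= b[3]):
        return  # the degenerate ray misses this node's box
    if "ids" in node:
        for i in node["ids"]:
            if i == self_id:
                continue  # ignore the circle of the vertex we are testing
            dx, dy = centers[i][0] - origin[0], centers[i][1] - origin[1]
            if dx * dx + dy * dy < r * r:  # the "intersect program" test
                hits.append(i)
        return
    for child in node["children"]:
        query(child, centers, r, origin, self_id, hits)
```

Calling `query` once per vertex, with `origin` set to that vertex’s position and `self_id` to its index, returns the same neighbor sets as a radius search over the particles.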

A limitation is that the force model we’re using is a bit outdated, yet very simple to implement, and as such our study is a proof of concept. We’re already working on other force models like FMM that will converge faster than the simple model we used in the paper, and we’re confident that we’ll also see decent speedups for those.

Obviously, all of this works because the radius is the same, no matter which particle we’re evaluating forces for. That restriction is actually not so bad; most graphs have weights associated with the edges, but not with the vertices. As such, vertices have no area (volume in 3D), and thus we can just assume that for our physical model the particles have no mass. It would of course be interesting to implement this approach for particles that do have mass, but then we wouldn’t get away with such an easy query.

The paper will be presented at this year’s IEEE VIS (due to the pandemic they will stream the talks online and attendance is free). Sample code for the paper can be found on GitHub: https://github.com/owl-project/owl-graph-drawing

Although the idea is relatively simple, it shows a nice way to use the NVIDIA RTX hardware extension for something completely different from rendering. I’m excited to see what the future brings on this front and what clever ideas people will come up with for using ray tracing in a similar fashion, and I hope that this paper is a little inspiration for them!